What Is Inter-Rater Reliability? | Definition & Examples

Inter-rater reliability is the degree of agreement or consistency between two or more raters evaluating the same phenomenon, behavior, or data.

In research, it plays a crucial role in ensuring that findings are objective, reproducible, and not dependent on a single observer’s judgment. Whether you’re analyzing questionnaire responses, coding interview transcripts, or assessing behavioral outcomes, strong inter-rater reliability boosts the credibility of your results.

The method to calculate inter-rater reliability depends on the type of data (e.g., nominal, ordinal, interval, or ratio) and the number of raters. The most common methods include Cohen’s kappa (for two raters), Fleiss’ kappa (for three or more raters), and the Intraclass Correlation Coefficient (for continuous data).

In a thematic analysis of interview transcripts, for example, each researcher independently reads the transcripts and assigns codes to relevant passages. If the coders consistently label the same responses with the same themes, the study demonstrates high inter-rater reliability. This consistency indicates that the findings reflect real patterns in the participants’ experiences rather than the subjective interpretations of individual researchers, which helps reduce observer bias.

Inter-rater reliability examples

Inter-rater reliability is a critical concept across many fields because it ensures that measurements and observations are consistent and trustworthy, regardless of who is conducting the assessment.

By using reliable coding, scoring, or evaluation methods, researchers can reduce subjective bias and strengthen the validity of their findings.

Inter-rater reliability in psychology example

By calculating inter-rater reliability, the researchers checked that the behavioral ratings were consistent across observers and not driven by individual bias. High inter-rater reliability supports the internal validity of the study: it makes it more likely that observed differences reflect the experimental conditions rather than the observers’ subjective judgments.

Inter-rater reliability in healthcare example

Multiple nurses independently score each patient, and the inter-rater reliability is calculated to ensure that the ratings are consistent across observers. The consistency is crucial for monitoring recovery trends, guiding treatment decisions, and comparing outcomes across different hospitals. It also supports the study’s external validity, making it easier to generalize findings to the broader population of postoperative patients.

Inter- vs intra-rater reliability

When discussing reliability, it’s important to distinguish between inter-rater and intra-rater reliability, as both play crucial roles in ensuring accurate and consistent measurements in research.

- Inter-rater reliability measures the consistency of ratings across different observers assessing the same phenomenon. It ensures that findings are objective and reproducible, regardless of who collects the data.

- Intra-rater reliability measures the consistency of a single observer when assessing the same phenomenon multiple times. It ensures that an individual rater’s measurements are stable and not influenced by fatigue, memory, or subjective shifts.

Inter-rater reliability

Three different nurses independently assess the same group of 20 patients at the same time. The researchers calculate the ICC to determine how consistently the different nurses rate each patient. High inter-rater reliability indicates that the pain scores are not dependent on which nurse is observing.

Intra-rater reliability

One of the nurses re-evaluates the same patients’ pain levels two days later without referencing the previous scores. The researcher calculates ICC to see if this nurse’s ratings are consistent over time. High intra-rater reliability indicates that the nurse’s scoring is stable.

How to calculate inter-rater reliability

The method to calculate inter-rater reliability depends on the type of data and the number of raters. The goal is to determine how consistently different observers assess the same phenomenon. Using the right formula ensures your measurements are reliable, reproducible, and credible.

The four most common methods are:

- Cohen’s kappa (two raters, categorical data)

- Fleiss’ kappa (three or more raters, categorical data)

- Intraclass Correlation Coefficient (continuous data)

- Percent agreement (simple, but limited, method)

Cohen’s kappa

Cohen’s kappa (κ) is used when two raters classify items into categories (nominal data or ordinal data). It accounts for the agreement expected by chance, providing a more accurate measure than simple percent agreement.

The formula for Cohen’s kappa is:

$$\kappa = \frac{P_o - P_e}{1 - P_e}$$

Where:

- Po = observed proportion of agreement between raters

- Pe = expected agreement by chance

The results look like this:

| | Rater 2: Anxious | Rater 2: Not anxious | Total |
|---|---|---|---|
| Rater 1: Anxious | 20 | 5 | 25 |
| Rater 1: Not anxious | 10 | 15 | 25 |
| Total | 30 | 20 | 50 |

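- Calculate observed agreement (Po): the two raters agree on 20 + 15 of the 50 cases.

$$P_o = \frac{20 + 15}{50} = 0.70$$

- Calculate expected agreement (Pe): for each category, multiply the two raters’ marginal proportions, then add the results.

$$P_e = \left(\frac{25}{50} \times \frac{30}{50}\right) + \left(\frac{25}{50} \times \frac{20}{50}\right) = 0.30 + 0.20 = 0.50$$

- Apply Cohen’s kappa formula

$$\kappa = \frac{0.70 - 0.50}{1 - 0.50} = 0.40$$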

A kappa of 0.40 falls at the top of the fair range, just short of moderate agreement between the two observers. To improve agreement, the researchers could run training sessions or write clearer rating guidelines before conducting another study.
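The calculation above can be sketched in a few lines of Python. This is a minimal illustration, not a reference implementation: the function name `cohens_kappa` and the nested-list table layout are assumptions made for this example, and the case where expected agreement equals 1 (division by zero) is not handled.

```python
def cohens_kappa(table):
    """Cohen's kappa from a square contingency table.

    table[i][j] is the number of items that rater 1 placed in
    category i and rater 2 placed in category j.
    """
    n_categories = len(table)
    total = sum(sum(row) for row in table)

    # Observed agreement: share of items on the diagonal.
    p_o = sum(table[c][c] for c in range(n_categories)) / total

    # Marginal totals for rater 1 (rows) and rater 2 (columns).
    row_totals = [sum(row) for row in table]
    col_totals = [sum(row[c] for row in table) for c in range(n_categories)]

    # Expected agreement: product of marginal proportions, summed over categories.
    p_e = sum(
        (row_totals[c] / total) * (col_totals[c] / total)
        for c in range(n_categories)
    )
    return (p_o - p_e) / (1 - p_e)


# Counts from the anxious / not anxious table above.
anxiety_table = [[20, 5], [10, 15]]
kappa = cohens_kappa(anxiety_table)
print(round(kappa, 2))
```

Because the function works on any square table, the same sketch applies when the raters use more than two categories.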

Fleiss’ kappa

When three or more raters classify categorical data, Fleiss’ kappa extends Cohen’s approach to handle multiple observers.

To test this, five raters (medical students) each review 20 skin lesion photographs and classify them as either:

- Likely benign

- Possibly malignant

- Clearly malignant

Since there are more than two raters, the researchers use Fleiss’ kappa to measure agreement across the multiple observers.

A simplified version of the data might look like this (the data for 5 out of the 20 images is shown here):

| Image | Likely benign | Possibly malignant | Clearly malignant | Total raters |
|---|---|---|---|---|
| 1 | 3 | 2 | 0 | 5 |
| 2 | 0 | 4 | 1 | 5 |
| 3 | 1 | 1 | 3 | 5 |
| 4 | 5 | 0 | 0 | 5 |
| 5 | 2 | 2 | 1 | 5 |

Step 1: Calculate agreement for each item

For each image, Fleiss’ kappa looks at how many pairs of raters agreed on the same category. The formula for the proportion of agreement for each image is:

$$P_i = \frac{1}{n(n - 1)} \sum_{j} n_{ij}(n_{ij} - 1)$$

Where:

- n = number of raters

- nij = number of raters who chose category j for image i

For example, for image 1:

$$P_1 = \frac{3(3 - 1) + 2(2 - 1) + 0(0 - 1)}{5(5 - 1)} = \frac{8}{20} = 0.40$$

Each image gets its own Pi, and you then average them to get P, the mean observed agreement across all items.

Step 2: Calculate the expected agreement

Next, compute how often each category is chosen overall (across all raters and items). For example, across all 20 images:

- Likely benign: 40% of all ratings

- Possibly malignant: 35% of all ratings

- Clearly malignant: 25% of all ratings

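Then calculate the expected agreement (Pe) by squaring each proportion and adding the results:

$$P_e = 0.40^2 + 0.35^2 + 0.25^2 = 0.16 + 0.1225 + 0.0625 = 0.345$$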

Step 3: Apply Fleiss’ kappa formula

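The standard formula to calculate Fleiss’ kappa is:

$$\kappa = \frac{P - P_e}{1 - P_e}$$

If the average observed agreement (P) across all items is 0.60 and the expected agreement (Pe) from Step 2 is 0.345:

$$\kappa = \frac{0.60 - 0.345}{1 - 0.345} = \frac{0.255}{0.655} \approx 0.39$$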

A κ of 0.39 indicates fair agreement among the five medical students.
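The three steps can be sketched in Python as follows. The function name `fleiss_kappa` and the list-of-rows input are assumptions for this illustration, and the sketch assumes every item is rated by the same number of raters. Only the five images shown in the table are used here, so the result differs from the 0.39 reported for the full set of 20 images.

```python
def fleiss_kappa(counts):
    """Fleiss' kappa from an items x categories table of rating counts.

    counts[i][j] is the number of raters who chose category j for item i.
    Assumes every item was rated by the same number of raters.
    """
    n_items = len(counts)
    n_categories = len(counts[0])
    n_raters = sum(counts[0])

    # Step 1: per-item agreement P_i, then the mean observed agreement P.
    p_items = [
        sum(c * (c - 1) for c in row) / (n_raters * (n_raters - 1))
        for row in counts
    ]
    p_mean = sum(p_items) / n_items

    # Step 2: overall proportion of ratings per category, then P_e.
    total_ratings = n_items * n_raters
    p_categories = [
        sum(row[j] for row in counts) / total_ratings
        for j in range(n_categories)
    ]
    p_e = sum(p * p for p in p_categories)

    # Step 3: chance-corrected agreement.
    return (p_mean - p_e) / (1 - p_e)


# The five images shown in the table above
# (columns: likely benign, possibly malignant, clearly malignant).
shown_images = [
    [3, 2, 0],
    [0, 4, 1],
    [1, 1, 3],
    [5, 0, 0],
    [2, 2, 1],
]
print(round(fleiss_kappa(shown_images), 2))
```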

Intraclass Correlation Coefficient (ICC)

The Intraclass Correlation Coefficient (ICC) is used when inter-rater reliability is calculated for continuous data.

The ICC quantifies how much of the total variance in the measurements is due to true differences between subjects versus differences caused by raters or random error.

- A high ICC (close to 1) means most of the variation reflects real differences between patients.

- A low ICC means raters are inconsistent, so much of the variation is due to measurement error.

Because the data is continuous (measured on a ratio scale) and there are multiple raters assessing the same subjects over time, the researchers use the ICC to measure inter-rater reliability.

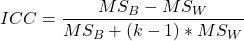

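There are several ICC models (depending on whether raters are fixed or random). A commonly used one is the two-way random effects model, which assumes all raters are randomly selected and rate all subjects. The simplified formula used here needs only two mean squares (strictly speaking, this two-term form corresponds to the one-way random effects ICC; the full two-way formula adds a separate term for rater variance):

$$ICC = \frac{MSB - MSW}{MSB + (k - 1) \times MSW}$$

Where: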

- MSB = mean square between subjects (variation among subjects)

- MSW = mean square within subjects (error + rater variance)

- k = number of raters

These values come from a two-way ANOVA table.

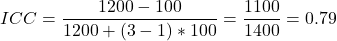

Sample calculation

Suppose 10 patients are each rated by 3 physiotherapists on knee flexion (in degrees). After conducting the ANOVA, the researchers find:

- MSB = 1200

- MSW = 100

- k = 3

$$ICC = \frac{1200 - 100}{1200 + (3 - 1) \times 100} = \frac{1100}{1400} \approx 0.79$$

The ICC of 0.79 indicates good agreement among the physiotherapists.
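The sample calculation can be reproduced with a short Python sketch. It assumes the ICC form that matches the mean squares defined above, (MSB − MSW) / (MSB + (k − 1) × MSW). The function names and the tiny ratings table at the end are hypothetical and only illustrate how the two mean squares could be obtained from raw scores.

```python
def icc_from_mean_squares(msb, msw, k):
    """ICC from between-subject and within-subject mean squares."""
    return (msb - msw) / (msb + (k - 1) * msw)


def mean_squares(ratings):
    """Between- and within-subject mean squares for a subjects x raters table.

    ratings[i][j] is the score rater j gave to subject i.
    """
    n_subjects = len(ratings)
    k = len(ratings[0])
    grand_mean = sum(sum(row) for row in ratings) / (n_subjects * k)
    subject_means = [sum(row) / k for row in ratings]

    # Variation among subject means, scaled by the number of raters.
    msb = k * sum((m - grand_mean) ** 2 for m in subject_means) / (n_subjects - 1)

    # Variation of individual ratings around their own subject's mean.
    msw = sum(
        (x - m) ** 2
        for row, m in zip(ratings, subject_means)
        for x in row
    ) / (n_subjects * (k - 1))
    return msb, msw


# Values from the knee flexion example above.
print(round(icc_from_mean_squares(1200, 100, 3), 2))

# Hypothetical raw scores: 3 subjects, 3 raters in perfect agreement.
msb, msw = mean_squares([[1, 1, 1], [2, 2, 2], [3, 3, 3]])
print(icc_from_mean_squares(msb, msw, 3))
```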

Percent agreement

The percent agreement method is the simplest way to assess inter-rater reliability. It measures the proportion of times that raters agree on a category or rating, without accounting for chance agreement like kappa does. This makes it less suitable for scientific research, but it is useful for preliminary checks or simple observational studies.

A simplified table of agreements might look like this:

| | Rater 2: Correct | Rater 2: Incorrect | Total |
|---|---|---|---|
| Rater 1: Correct | 15 | 3 | 18 |
| Rater 1: Incorrect | 2 | 10 | 12 |
| Total | 17 | 13 | 30 |

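The formula to calculate percent agreement is:

$$\text{Percent agreement} = \frac{\text{number of agreements}}{\text{total number of ratings}} \times 100$$

The number of agreements (where both raters chose correct or both chose incorrect) is 15 + 10 = 25, out of 30 ratings in total:

$$\text{Percent agreement} = \frac{25}{30} \times 100 \approx 83.3\%$$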

This means that in 83.3% of the cases, the observers gave the same rating.
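As a rough sketch, percent agreement can be computed directly from two lists of ratings. The function name `percent_agreement` and the way the lists are built are assumptions for this example; the lists are constructed only to match the counts in the table above.

```python
def percent_agreement(rater_1, rater_2):
    """Percentage of items on which two raters gave the same rating."""
    if len(rater_1) != len(rater_2):
        raise ValueError("Both raters must rate the same number of items.")
    agreements = sum(a == b for a, b in zip(rater_1, rater_2))
    return 100 * agreements / len(rater_1)


# Ratings arranged to match the table: 15 both correct, 3 where only
# rater 1 chose correct, 2 where only rater 2 chose correct, 10 both incorrect.
rater_1 = ["correct"] * 18 + ["incorrect"] * 12
rater_2 = ["correct"] * 15 + ["incorrect"] * 3 + ["correct"] * 2 + ["incorrect"] * 10

print(round(percent_agreement(rater_1, rater_2), 1))
```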

Frequently asked questions about inter-rater reliability

- What is the formula for calculating inter-rater reliability?

There isn’t just one formula for calculating inter-rater reliability. The right one depends on your data type (e.g., nominal data, ordinal data) and the number of raters.

- Cohen’s kappa (κ) is commonly used for two raters

- Fleiss’ kappa is typically used for three or more raters

- The Intraclass Correlation Coefficient (ICC) is used for continuous data (interval or ratio). This is based on analysis of variance (ANOVA)

The most used formula (for Cohen’s kappa) is:

$$\kappa = \frac{P_o - P_e}{1 - P_e}$$

Po is the observed proportion of agreement, and Pe stands for the expected agreement by chance.

- What is inter-rater reliability in psychology?

In psychology, inter-rater reliability refers to the degree of agreement between different observers or raters who evaluate the same behavior, test, or phenomenon.

It ensures that measurements are consistent, objective, and not dependent on a single person’s judgment, which is especially important in research, clinical assessments, and behavioral studies.

High inter-rater reliability indicates that results are dependable and reproducible across different raters.

- What is a good inter-rater reliability score?

A good inter-rater reliability score depends on the statistic used and the context of the study.

For Cohen’s kappa (two raters), common guidelines are:

- ≤ 0.20: Poor agreement

- 0.21–0.40: Fair agreement

- 0.41–0.60: Moderate agreement

- 0.61–0.80: Substantial agreement

- 0.81–1.00: Almost perfect agreement

For the Intraclass Correlation Coefficient (interval or ratio data), similar thresholds are used:

- < 0.50: Poor agreement

- 0.50–0.75: Moderate agreement

- 0.76–0.90: Good agreement

- > 0.90: Excellent agreement
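The guideline bands above can be turned into a small lookup helper. This is only a sketch: the function names are hypothetical, values that fall exactly on a boundary are assigned to the lower band, and the labels should be read as rules of thumb rather than strict cut-offs.

```python
def interpret_kappa(kappa):
    """Label a Cohen's kappa value using the guideline bands listed above."""
    if kappa <= 0.20:
        return "poor"
    if kappa <= 0.40:
        return "fair"
    if kappa <= 0.60:
        return "moderate"
    if kappa <= 0.80:
        return "substantial"
    return "almost perfect"


def interpret_icc(icc):
    """Label an ICC value using the guideline bands listed above."""
    if icc < 0.50:
        return "poor"
    if icc <= 0.75:
        return "moderate"
    if icc <= 0.90:
        return "good"
    return "excellent"


print(interpret_kappa(0.40))
print(interpret_icc(0.79))
```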

Cite this Quillbot article

Merkus, J. (2025, October 24). What Is Inter-Rater Reliability? | Definition & Examples. Quillbot. Retrieved February 2, 2026, from https://quillbot.com/blog/research/inter-rater-reliability/